Explanation, semantics, and ontology

A promise of advances in interoperability between different data representations.

Today I’m attempting to understand “Explanation, semantics, and ontology”, published in Data & Knowledge Engineering on 2024-06-25. I set out to understand how ontological unpacking can help solve the problem of interoperability between different representations of data, but I still lack the practical intuition that comes from expert experience with UML tools.

Ontologies and conceptual models

We are swimming in an ever-growing ocean of available data. In specific domains, knowledge experts, computer scientists and philosophers try to make sense of it by defining entity types and categories and by investigating the relations between them. These efforts result in ontologies: formal representations of a set of concepts and their relationships. Typically an ontology has a well-defined scope and applies to a particular domain.

For every technical domain, people develop language to describe the phenomena within it. They build conceptual models. These conceptual models require “formal semantics”: a large number of words need to have precise, specific meanings. They also need to be explained through the lens of “ontological commitment” to the world. That is, the relationships between the well-defined words should reflect reality. Some statements must be “given”, for example: patient X attended hospital Y for treatment Z. Others can then be derived from them via the definitions of the particular conceptual model. But facts about the world do not emerge purely from the conceptual model. What makes a statement true is known as its truthmaker, which links abstract definitions to actual entities in the world.

Ontological unpacking and weak truthmaking

The authors of the paper advocate constructing conceptual models from which it is possible to arrive at an “unpacked” ontological commitment, one that does not merely describe what or how something is but explains why it is. This ontological commitment reflects the alignment between the model and the actual structure of reality, specifying what entities or types must exist for the model's descriptions to hold true.

After distancing themselves from the semantics and ontologies of strictly mathematical and computer-science theories of logical sets, the authors move into the waters of quantitative, numerical truths, going beyond binary true-or-false statements. They introduce the concepts of strong and weak truthmaking, where the weak kind is a question of degree: a way of being rather than absolute existence. The authors give an easily understandable example of someone’s height, and assert that allowing such numerical facts to be weak truthmakers opens up avenues of contrastivity and counterfactuality in reasoning.
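To make the height example concrete, here is a minimal Python sketch of how I read it. It is not taken from the paper: the `Person` class, the names, and the heights are all hypothetical. The point is that no “taller than” link is stored anywhere; the comparison holds because of each person's own height, which also makes counterfactual questions easy to ask.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Person:
    name: str
    height_cm: float  # intrinsic quality; plays the role of the weak truthmaker


def taller_than(a: Person, b: Person) -> bool:
    # The comparison is derived from the two heights, not stored as a fact.
    return a.height_cm > b.height_cm


def would_be_taller(b: Person, hypothetical_height_cm: float) -> bool:
    # Counterfactual: would someone with this hypothetical height be taller than b?
    return hypothetical_height_cm > b.height_cm


# Hypothetical individuals, for illustration only.
alice = Person("Alice", 172.0)
bob = Person("Bob", 180.0)
```

Asking `taller_than(alice, bob)` and `would_be_taller(bob, 185.0)` separates what is the case from what would be the case if Alice's height were different.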

Scaling the knowledge base through semantic interoperability

The authors quote a real-life example of a question posed to a knowledge base that requires understanding across multiple semantic domains:

Which organisations have a contract with a governmental institution and have donated money to the political campaign of any politician who governs that institution or who has a personal relationship with someone who does?

As a side exercise, I turned to highly capable modern LLMs to see whether it is even possible to construct a SQL or SPARQL query that attempts to answer the above question, given reasonable assumptions about how the data is structured:

SQL

    -- Organizations that hold a contract with an institution and donated either
    -- to the politician governing it or to a politician related to the same person.
    SELECT DISTINCT o.*
    FROM organizations o
    JOIN contracts c ON o.organization_id = c.organization_id
    JOIN institutions i ON c.institution_id = i.institution_id
    JOIN politicians p ON i.politician_id = p.politician_id
    LEFT JOIN donations d ON o.organization_id = d.organization_id
    LEFT JOIN people rp ON p.related_person_id = rp.person_id
    WHERE d.politician_id = i.politician_id   -- direct donation to the governing politician
       OR EXISTS (                            -- donation to a politician linked to the same person
            SELECT 1
            FROM politicians p2
            WHERE p2.related_person_id = rp.person_id
              AND d.politician_id = p2.politician_id
       );

SPARQL

    SELECT DISTINCT ?organizationName
    WHERE {
      # Organization has a contract with an institution
      ?organization :hasContractWith ?institution .
      ?organization :name ?organizationName .

      # Politician governs the institution
      ?institution :governedBy ?governingPolitician .

      # Check if the organization has donated to the governing politician
      OPTIONAL {
        ?organization :donatedTo ?governingPolitician .
        BIND(true AS ?directDonation)
      }

      # Check if the politician has a personal relationship with another person
      OPTIONAL {
        ?governingPolitician :hasPersonalRelationshipWith ?person .
        ?relatedPolitician :hasPersonalRelationshipWith ?person .
        ?organization :donatedTo ?relatedPolitician .
      }

      # Ensure that at least one of the donation conditions is met
      FILTER(BOUND(?directDonation) || BOUND(?relatedPolitician))
    }

The authors correctly point out that, in order not merely to satisfy computer science requirements but actually to answer the question as it is meant, one needs to be aware of additional relationships between entities and of “how the world works”. For example, parthood is transitive, which a graph database query may not capture. And “hasPersonalRelationshipWith” is a relationship whose definition lies at the heart of the question's meaning. To quote in detail:

“Finally, one has to understand a plethora of temporal aspects including being able to answer in which period: (a) campaign donations are made; (b) contracts are established between (potentially donor) companies and organizational units; (b) a certain organizational unit is part of another; (d) people are assigned to play certain roles in organizational units; (e) personal relationships (whatever those mean) hold. Understanding (a-e) requires explaining what makes the relations in this scenario hold between those specific relata when they do, that is, what are the truthmakers of these relational propositions.”
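Two of the requirements raised here, transitive parthood between organisational units and the question of whether two periods overlap, can be sketched in a few lines of Python. This is only my illustration, not something from the paper: the unit names, the dates, and the helpers `part_of_closure` and `overlaps` are all hypothetical.

```python
from datetime import date

# Hypothetical direct parthood facts, written as (part, whole).
DIRECT_PART_OF = {
    ("procurement_office", "finance_department"),
    ("finance_department", "ministry"),
}


def part_of_closure(direct):
    """Return every (part, whole) pair implied by transitivity."""
    closure = set(direct)
    changed = True
    while changed:
        changed = False
        for a, b in list(closure):
            for c, d in list(closure):
                if b == c and (a, d) not in closure:
                    closure.add((a, d))
                    changed = True
    return closure


def overlaps(start_a, end_a, start_b, end_b):
    """True when two closed date intervals share at least one day."""
    return start_a <= end_b and start_b <= end_a


closure = part_of_closure(DIRECT_PART_OF)

# Hypothetical validity periods for a contract and a politician's term of office.
contract_period = (date(2020, 1, 1), date(2021, 12, 31))
term_of_office = (date(2021, 6, 1), date(2024, 5, 31))
contract_during_term = overlaps(*contract_period, *term_of_office)
```

A query that only follows direct `part_of` links would miss that the procurement office belongs to the ministry, and a query that ignores periods would match a contract to a politician who was not yet in office.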

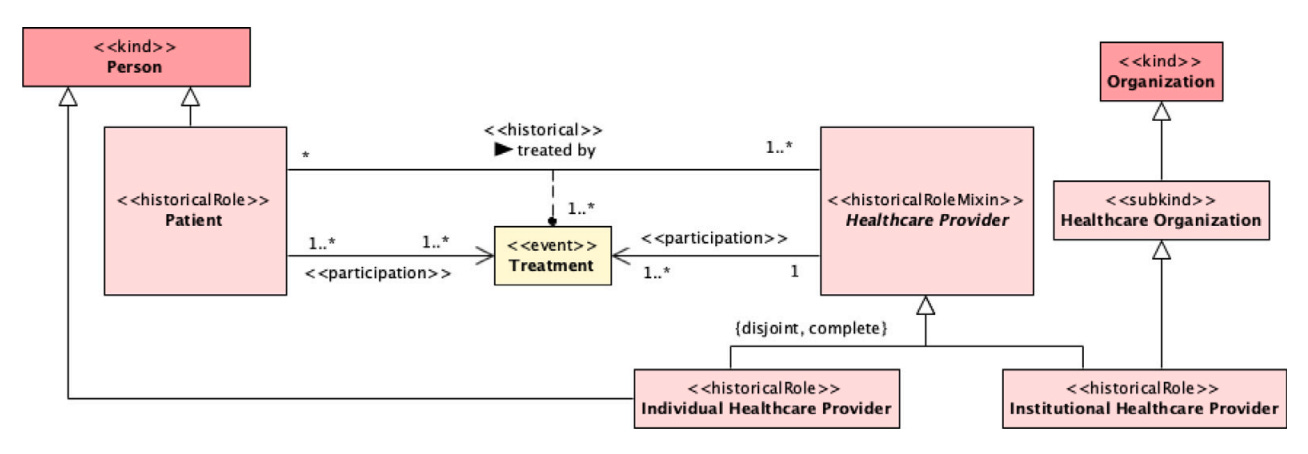

The authors set out a promise of “ontological unpacking” in order to combine truth representations of different knowledge bases. They start with an example of a patient being treated by a healthcare provider.

Doctor, doctor! I’m a patient patient

In order to represent the reality of a specific patient (entity) being treated by a healthcare provider (entity), the authors introduce a few relation types and related constructs:

Comparative relations (e.g., one condition being more severe than another) are derived from intrinsic properties of the entities involved (like medical conditions).

Material relations (e.g., "treated by") require a relator to explain the connection between entities (like treatments connecting patients and providers).

Phases represent temporary states that individuals can move in and out of without changing their fundamental identity.

Because many patients can be treated by many healthcare providers, it’s important to introduce cardinality constraints. A specific treatment may apply to only one patient, even when it is performed by multiple healthcare providers.
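One way to picture the relator and its cardinality constraints is the small Python sketch below. It is my own simplification under stated assumptions, not the paper's OntoUML model: the `Treatment` class, the `treated_by` helper, and all names are hypothetical. A treatment carries exactly one patient by construction and refuses an empty set of providers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Treatment:
    """Relator linking exactly one patient to one or more providers."""
    patient: str            # exactly one patient per treatment
    providers: frozenset    # one or more healthcare providers

    def __post_init__(self):
        if not self.providers:
            raise ValueError("a treatment needs at least one healthcare provider")


def treated_by(treatments, patient, provider):
    # "treated by" holds only because some Treatment relator connects the two.
    return any(
        t.patient == patient and provider in t.providers for t in treatments
    )


# Hypothetical data for illustration.
treatments = [Treatment("ann", frozenset({"dr_lee", "dr_kim"}))]
```

Here `treated_by(treatments, "ann", "dr_lee")` is true because of the treatment that exists, which is the sense in which the relator explains the material relation.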

Finally, the authors note that a decision to treat patient A ahead of patient B comes from a numerical value of “condition severity”, similar to the height example discussed earlier. The truthmaker of treatment prioritisation is not a relationship between patients but a relative comparison of the severity of their conditions. Since this comparison is transitive, it allows for reasoning and decision making around healthcare triaging.
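As a rough sketch of why this matters for triage: if prioritisation is derived from a severity value, a consistent treatment order falls out of a simple sort. The severity scores and function names below are hypothetical and only illustrate the idea.

```python
def more_severe(severity, a, b):
    # Comparative relation derived from each patient's own severity value.
    return severity[a] > severity[b]


def triage_order(severity):
    # Most severe condition first.
    return sorted(severity, key=severity.get, reverse=True)


# Hypothetical severity scores for illustration.
severity = {"patient_a": 7, "patient_b": 4, "patient_c": 9}
order = triage_order(severity)
```

Because the comparison is between numbers, “C before A” and “A before B” already imply “C before B”, with no extra rule needed.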

The authors discuss the use of ontology design patterns as part of the ontological unpacking process. These patterns help systematise how different relations and entities are represented, ensuring that different models can be aligned based on their underlying structures. The paper shows that by recognising these patterns, it is possible to reduce ambiguity in how models represent the world and thereby support interoperability between different systems.

Practical implications

The authors introduce a practical tool for ontological unpacking based on OntoUML, a framework for conceptual modelling that makes it possible to visualise potential instantiations of entity relations and to discover faults in the ontology's logic, which can then be addressed by revisiting the conceptual model. They observe that the model represented in Figure 2 above admits an unintended but possible interpretation: “in the absence of additional constraints, it also makes clear that the same person can be patient and healthcare provider in the very same treatment which might not be an interpretation intended”.
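The unintended interpretation is easy to state as a check over instance data. The sketch below is not how the OntoUML tooling works; it is just a hypothetical Python helper, `self_treatments`, run over made-up records, to show the kind of extra constraint the model would need.

```python
def self_treatments(treatments):
    """Return treatments in which the patient also appears among the providers."""
    return [t for t in treatments if t["patient"] in t["providers"]]


# Hypothetical instances of the treatment relator.
treatments = [
    {"id": "t1", "patient": "ann", "providers": {"dr_lee"}},
    {"id": "t2", "patient": "dr_lee", "providers": {"dr_lee", "dr_kim"}},
]

suspicious = self_treatments(treatments)
```

In this data, treatment `t2` is flagged: the person `dr_lee` is both patient and provider in the same treatment, which the unconstrained model permits.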

Conclusion

This paper gave me a much deeper appreciation of how complex the ontologies associated with knowledge bases can be. Perhaps by combining modern advances in LLMs for data ingestion with debugging tools like the visualisations shown in the paper’s Figure 6, it’s possible to bootstrap a larger knowledge base that combines different sources and conceptual models.

#bits